Samsung rallies on expectations of Nvidia’s HBM order

Nvidia CEO Jensen Huang hinted that the world’s No. 1 AI chip supplier will procure HBM chips from the Korean memory giant

Mar 20, 2024 (GMT+09:00)

SAN JOSE, Calif. – Samsung Electronics Co.'s stock soared on Wednesday after Nvidia Corp., the world's best-known AI chip provider, hinted that it may use high bandwidth memory (HBM) chips from Samsung, the world's top memory chipmaker, in its graphics processing units (GPUs), essential components of the artificial intelligence revolution.

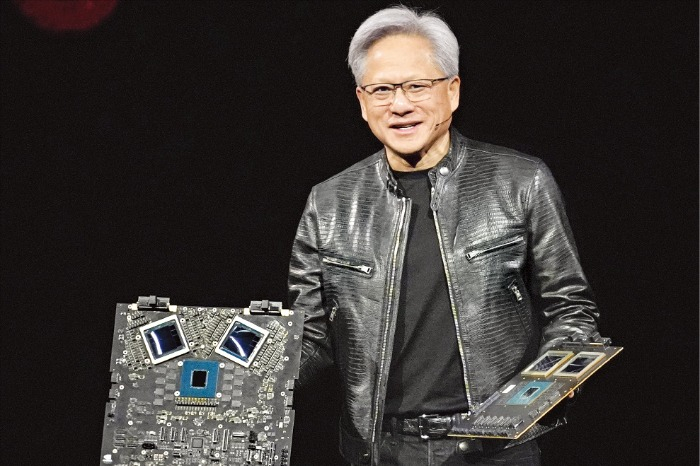

"HBM memory is very complicated and the value added is very high. We are spending a lot of money on HBM," Jensen Huang, Nvidia co-founder and chief executive officer, said at a media briefing on Tuesday in San Jose, California.

Huang said Nvidia is testing Samsung Electronics' HBM chips and that he is looking forward to the results.

"Samsung is very good, a very good company," Huang added.

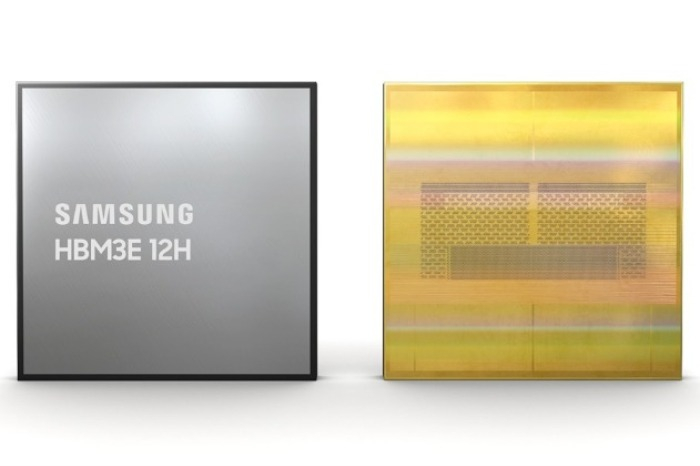

Investors took the Nvidia CEO's comment on Samsung's HBM chips as a signal that the US AI chip juggernaut is about to order the Korean memory giant's latest, fifth-generation HBM chips, the HBM3E.

Shares of heavyweight Samsung Electronics jumped 5.6% to close at 76,900 won ($57.39) on Wednesday, outperforming the broader Kospi's 1.3% gain.

Last month, the Korean chip giant announced that it had developed the industry's first 36-gigabyte (GB), 12-stack HBM3E, the fifth generation of HBM. The company plans to mass-produce the new HBM model in the first half of this year.

Last summer, Samsung and Nvidia worked together on the technical verification of Samsung's fourth-generation HBM3 chips and on packaging services for the US company.

HEATED COMPETITION IN THE HBM MARKET

HBM is a high-value, high-performance memory chip made of multiple vertically interconnected DRAM chips. It processes data significantly faster than general DRAM products while reducing latency, power consumption and size.

Demand for HBM is growing rapidly because such high-performance memory is essential for running AI chips such as Nvidia's GPUs, which are at the center of the AI revolution.

Currently, Samsung's crosstown rival SK Hynix Inc., the world's No. 2 memory chipmaker, is the unrivaled leader in the segment, controlling about 90% of global HBM3 supply.

It is believed to be the sole supplier of HBM3 chips for Nvidia's GPUs.

On Tuesday, SK Hynix announced that it had begun mass-producing HBM3E chips, an industry first, for supply to Nvidia. The company announced in August last year that it had developed the fifth-generation HBM chips and delivered samples to Nvidia.

"The upgrade cycle for Samsung and SK Hynix is incredible," Huang told reporters at the media briefing Tuesday. "As soon as Nvidia starts growing, they grow with us."

"I value our partnership with SK Hynix and Samsung very incredibly," he added.

The two Korean memory giants are in fierce competition to take the lead in the burgeoning HBM chip market.

Samsung Electronics, in particular, has been investing heavily in high-value, high-performance memory chip development to catch up with SK Hynix.

HBM sales are forecast to account for 20.1% of total global dynamic random-access memory (DRAM) revenue this year, up sharply from 8.4% in 2023 and 2.6% in 2022, according to industry tracker TrendForce.

Despite the company's commanding lead, SK Hynix shares gave up some of their recent gains on the news that Samsung Electronics is set to join the list of Nvidia's HBM suppliers.

SK Hynix lost 2.3% to close at 156,500 won on Wednesday.

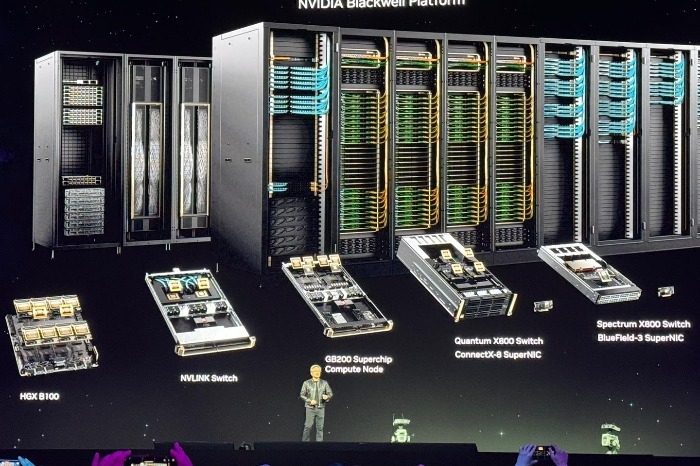

Huang unveiled the company's latest chips on Monday, the first day of GTC (GPU Technology Conference), Nvidia's four-day annual conference held in San Jose.

The new chips, code-named Blackwell, are the successors to the company's highly successful H100, which has been in short supply amid the generative AI boom.

Nvidia is currently the undisputed leader in the AI chip sector, with more than 80% of the market for AI computation.

Write to Jin-seok Choi at iskra@hankyung.com

Sookyung Seo edited this article.