SK Hynix, Samsung's fight for HBM lead set to escalate on AI boom

SK Hynix’s DRAM market share jumped to 31.9% in Q2, while Samsung Electronics’ dropped to 38.2%

Sep 03, 2023 (GMT+09:00)

Long-time runner-up SK Hynix Inc. narrowed its market share gap with global memory leader Samsung Electronics Co. to 6.3 percentage points in the second quarter, the slimmest margin in a decade. The gain came on brisk sales of its high-value, high-performance HBM memory products, driven by the continuing generative AI boom.

SK Hynix earned $3.4 billion in DRAM sales in the April-June period, up a whopping 49% from the previous quarter, global technology industry tracker Omdia said on Sunday. Global DRAM sales reached $10.7 billion in the quarter, up 15% from the previous quarter but down 57% from a year earlier.

Thanks to the strong sales, the Korean DRAM major snatched back the No. 2 spot in the DRAM market from US rival Micron Technology Inc. with a 31.9% share, up 7.2 percentage points from the first quarter.

This narrowed the runner-up’s gap with market leader Samsung Electronics to 6.3 percentage points from 18.1 percentage points in the first quarter, according to Omdia.

Samsung Electronics retained the top position with DRAM sales of $4.1 billion in the second quarter, but its sales rose a mere 3% from the previous quarter and its market share contracted to 38.2% from 42.8%.

It was the first time since 2013, when it stood at 36.2%, that Samsung Electronics’ DRAM market share had dipped below 40%. SK Hynix’s share, meanwhile, had stayed below 30% throughout the past decade.
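For readers who want to check the arithmetic behind these figures, the following is a minimal sketch that reproduces the percentage-point gaps from the shares and sales cited above. The variable and function names are illustrative assumptions, not Omdia's methodology, and shares implied by the rounded sales figures can differ from the reported shares by a tenth of a point or so.

```python
# Figures as reported in the article (Omdia, Q2 2023).
# All names below are illustrative assumptions for this sketch.
Q2_SHARE = {"Samsung": 38.2, "SK Hynix": 31.9}    # DRAM market share, %
Q1_SAMSUNG_SHARE = 42.8                           # %
SK_HYNIX_SHARE_GAIN = 7.2                         # percentage points, Q1 to Q2
Q2_SALES_BN = {"Samsung": 4.1, "SK Hynix": 3.4}   # DRAM sales, $ billion
Q2_TOTAL_SALES_BN = 10.7                          # global DRAM sales, $ billion


def gap_pp(leader_share, runner_up_share):
    """Gap between two market shares, in percentage points."""
    return round(leader_share - runner_up_share, 1)


def implied_share(sales_bn, total_bn):
    """Market share (%) implied by the rounded sales figures."""
    return round(sales_bn / total_bn * 100, 1)


# SK Hynix's first-quarter share, backed out from its reported gain.
q1_sk_hynix_share = round(Q2_SHARE["SK Hynix"] - SK_HYNIX_SHARE_GAIN, 1)

q1_gap = gap_pp(Q1_SAMSUNG_SHARE, q1_sk_hynix_share)
q2_gap = gap_pp(Q2_SHARE["Samsung"], Q2_SHARE["SK Hynix"])

print(f"Q1 gap: {q1_gap} pp, Q2 gap: {q2_gap} pp")
for company, sales in Q2_SALES_BN.items():
    share = implied_share(sales, Q2_TOTAL_SALES_BN)
    print(f"{company}: implied Q2 share of about {share}%")
```

The gaps are differences between shares, so they are expressed in percentage points rather than percent.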

HBM POISED TO TIP BALANCE

SK Hynix has regained the No. 2 position thanks to the ongoing generative AI sensation.

To run generative AI workloads smoothly, GPUs need to be paired with multiple high bandwidth memory (HBM) chips.

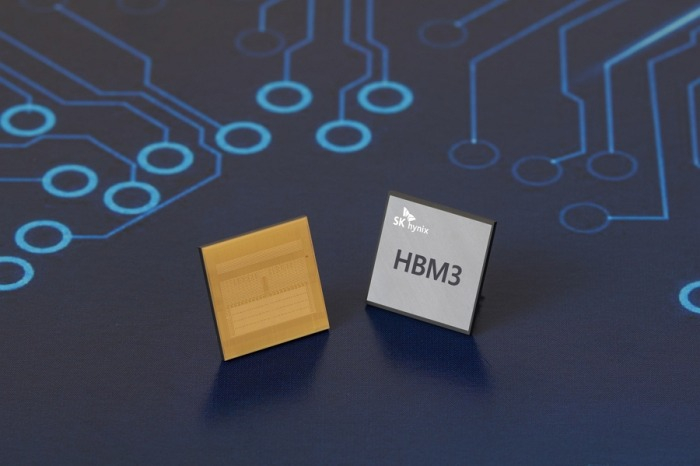

HBM is a high-value and high-performance memory that vertically interconnects multiple DRAM chips, dramatically increasing data processing speed compared with general DRAM products.

SK Hynix was the first in the world to develop HBM chips and is the exclusive provider of HBM3, the fourth-generation HBM, to Nvidia Corp., the global GPU leader.

It has also provided Nvidia with samples of HBM3E, the fifth-generation HBM considered the industry’s best-performing DRAM chip for AI applications, for performance evaluation. As a result, its lead in the HBM market is expected to continue for a while, market analysts said.

In particular, the strong AI momentum is expected to bring changes to the DRAM market that bode well for SK Hynix.

Omdia noted that the AI boom has lifted sales of HBM products and other high-performance DRAM products with capacities of 128 gigabytes (GB) or more, slowing the decline in overall DRAM prices.

It projects 100% growth in HBM demand this year and next, revised up from its earlier forecast of 50% growth, driven by the AI boom.

Nvidia’s results support the brighter DRAM market outlook. The company surprised the market with stellar earnings in its fiscal second quarter, from May to July, with sales and earnings per share exceeding the market consensus by 20% and 30%, respectively.

SAMSUNG GOES ALL OUT

Global DRAM leader Samsung Electronics was, however, late in developing HBM, trailing behind SK Hynix in HBM shipments.

But it is preparing to catch up with SK Hynix through massive investments in developing high-value AI chips, aiming to ward off its crosstown rival's ascent in the broader DRAM market.

According to sources in the semiconductor industry on Friday, the world’s largest DRAM maker is expected to soon supply its own HBM3 chips to Nvidia after supplying its samples to the US graphics chip leader last month for performance evaluation.

Its HBM3 chips are expected to be used in Nvidia’s A100 and H100 Tensor Core GPUs, a move that could dent SK Hynix’s dominance in the HBM market, according to sources.

Samsung Electronics is known to be already supplying its HBM3 chips to another US fabless chip designer, Advanced Micro Devices Inc. (AMD).

Separately, Samsung Electronics has also developed the industry’s first and highest-capacity 32 gigabit (Gb) DDR5 DRAM, using the industry’s leading 12-nanometer process technology, the company announced on Friday.

The company said the latest development is ideal for various AI applications with a capacity that is double that of the 16 Gb DDR5 but in the same package size.

It is also said to offer 10% higher power efficiency than its predecessor.

Write to Jeong-Soo Hwang at hjs@hankyung.com

Sookyung Seo edited this article.
