Samsung set to supply HBM3 to Nvidia, develops 32 Gb DDR5 chip

The expected deal, which follows a similar contract with AMD, will solidify its memory leadership in the AI era, analysts say

Sep 01, 2023 (GMT+09:00)

Samsung Electronics Co. is expected to soon supply its high-performance HBM3 DRAM chips to US graphics chip designer Nvidia Corp., a move that will strengthen Samsung’s presence in the fast-growing artificial intelligence (AI) chip segment.

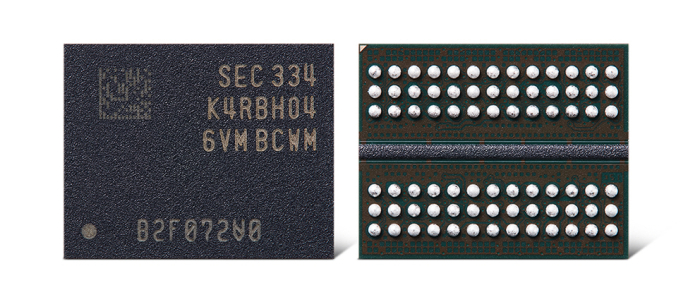

Separately, Samsung, the world’s largest memory chipmaker, said on Friday that it has developed the industry’s first and highest-capacity 32 gigabit (Gb) DDR5 DRAM, using the industry’s leading 12-nanometer process technology.

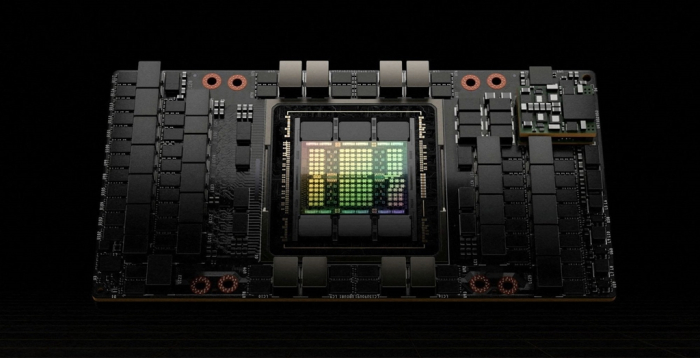

The Suwon, South Korea-based company last month provided Nvidia with samples of its fourth-generation High Bandwidth Memory (HBM3) chips for quality verification on Nvidia’s A100 and H100 Tensor Core graphics processing units (GPUs).

With the completion of the quality tests, Samsung will likely start supplying its HBM3 chips to Nvidia as early as October, industry sources said.

The two companies have also agreed on Samsung’s supply of HBM3 chips for next year, sources said. Samsung will likely supply about 30% of Nvidia’s HBM3 needs in 2024, they said.

Nvidia has so far received HBM3 chips exclusively from SK Hynix Inc., the world’s second-largest memory chipmaker and Samsung’s crosstown rival.

SK Hynix said last month it provided samples of a new high-performance chip, HBM3E, to Nvidia for evaluation.

Nvidia designs GPUs used to run generative AI services such as ChatGPT, a chatbot developed by Microsoft Corp.-backed OpenAI. Such services require semiconductors that can process large volumes of data quickly.

ChatGPT is known to use about 10,000 units of Nvidia’s A100 chip. HBM3 DRAM is a key component in A100.

SAMSUNG’S DEAL WITH AMD

Samsung is also known to be supplying its HBM3 chips to US fabless chip designer Advanced Micro Devices Inc. (AMD) following a successful verification test on AMD’s Instinct MI300X accelerators.

Given its supply deals with Nvidia and AMD, Samsung’s global HBM chip market share will likely be over 50% next year, industry officials said.

The HBM series of DRAM is in growing demand as the chips power generative AI services that run on high-performance computing systems.

Such chips are used in high-performance data centers as well as in machine learning platforms that raise AI and supercomputing performance.

HBM3 is said to have a capacity 12 times higher and a bandwidth 13 times higher than GDDR6, the latest DRAM product.

CHIP PACKAGING DEALS WITH NVIDIA, AMD

Sources said Samsung is also in advanced talks to offer chip packaging services for Nvidia’s GPUs and AMD’s central processing units (CPUs).

Packaging, one of the final steps in semiconductor manufacturing, places chips in a protective case to prevent corrosion and provides an interface to combine and connect already-made chips.

Nvidia has relied heavily on Taiwan Semiconductor Manufacturing Co. (TSMC) for its chip packaging. With TSMC’s packaging lines almost fully booked, however, Nvidia and other fabless clients are turning to other foundry players.

The global advanced packaging market is forecast to surge 74% to $65 billion by 2027 from $37.4 billion in 2021, according to industry consulting firm Yole Intelligence.
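As a quick check of the forecast arithmetic, the sketch below recomputes the total growth from the two market-size figures quoted above. The implied annual growth rate is not stated in the article; it is derived here on the assumption of smooth compounding over the six years from 2021 to 2027, and the function names are illustrative only.

```python
def total_growth_pct(start: float, end: float) -> float:
    """Total percentage growth from start to end."""
    return (end / start - 1.0) * 100.0


def implied_annual_growth_pct(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by start, end and a number of years.

    Assumes smooth compounding, which is a simplification.
    """
    return ((end / start) ** (1.0 / years) - 1.0) * 100.0


# Figures quoted in the article (US$ billions).
market_2021_bn = 37.4
market_2027_bn = 65.0

growth = total_growth_pct(market_2021_bn, market_2027_bn)
annual = implied_annual_growth_pct(market_2021_bn, market_2027_bn, 2027 - 2021)

print(f"Total growth 2021-2027: {growth:.0f}%")
print(f"Implied annual growth (assumed compounding): {annual:.1f}%")
```

The total comes out at about 74%, matching the figure cited; the annual rate is only an illustration of what that total would mean per year.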

Citi Global Markets said on Friday that Samsung’s HBM3 deals would boost its profits next year.

Citi analyst Lee Se-cheol said he expects Samsung’s 2024 operating profit to rise 7% from this year.

Citi also raised its target price for Samsung’s shares to 120,000 won from 110,000 won earlier. Shares of Samsung finished 6.1% higher at 71,000 won on Friday, outperforming the benchmark Kospi’s 0.3% gain.

BREAKTHROUGH WITH 32 Gb DDR5 DRAM

Separately, Samsung on Friday unveiled a 12 nm-class 32 Gb double data rate (DDR)5 DRAM, which it said is ideal for various AI applications.

The latest memory, which doubles the capacity of the 16 Gb DDR5 in the same package size, offers the industry’s highest capacity for a single DRAM chip. It also improves power efficiency by 10% compared with 16 Gb products.

The chipmaker began mass production of 16 Gb DDR5 chips with the industry’s most advanced 12 nm process node in May.

Having developed its first 64-kilobit (Kb) DRAM in 1983, Samsung has increased its DRAM capacity by a factor of about 500,000 over the past 40 years.
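The 500,000-fold figure can be checked with a short calculation. This is a minimal sketch that assumes binary prefixes (1 Kb = 2^10 bits, 1 Gb = 2^30 bits), the usual convention for DRAM densities; the variable names are illustrative.

```python
# DRAM densities in bits, assuming binary prefixes.
KILOBIT = 2**10
GIGABIT = 2**30

first_dram_bits = 64 * KILOBIT   # 64 Kb DRAM developed in 1983
latest_dram_bits = 32 * GIGABIT  # 32 Gb DDR5 DRAM announced in 2023

capacity_factor = latest_dram_bits // first_dram_bits
print(capacity_factor)  # 524288, i.e. 2**19
```

The exact ratio is 524,288, which rounds to the factor of 500,000 cited.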

“With our 12 nm-class 32Gb DRAM, we have secured a solution that will enable DRAM modules of up to 1 terabyte (TB), allowing us to be ideally positioned to serve the growing need for high-capacity DRAM in the era of AI and big data,” said Hwang Sang-joon, executive vice president of DRAM Product & Technology at Samsung.
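To put the 1 TB module figure in perspective, the sketch below counts how many DRAM dies are needed to hold 1 TB of data at 32 Gb and at 16 Gb per die. It assumes binary prefixes and counts data capacity only; extra dies for error correction and the actual stacking or packaging scheme are not described in the article and are ignored here.

```python
BITS_PER_BYTE = 8
GIGABIT = 2**30                      # bits, binary prefix assumed
TERABYTE_BITS = 2**40 * BITS_PER_BYTE


def dies_for_module(module_bits: int, die_gigabits: int) -> int:
    """Number of dies needed to reach module_bits of data capacity."""
    return module_bits // (die_gigabits * GIGABIT)


dies_32gb = dies_for_module(TERABYTE_BITS, 32)
dies_16gb = dies_for_module(TERABYTE_BITS, 16)

print(dies_32gb)  # 256
print(dies_16gb)  # 512
```

Under these assumptions, doubling the die density halves the number of dies a 1 TB module needs, from 512 to 256.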

Samsung said it will continue expanding its lineup of high-capacity DRAM to retain its market leadership in the AI era.

According to market research firm IDC, the amount of DRAM installed per server is expected to double to 3.86 TB by 2027 from an estimated 1.93 TB this year.

Write to Ik-Hwan Kim and Jeong-Soo Hwang at lovepen@hankyung.com

In-Soo Nam edited this article.
