SK Hynix provides samples of best-performing HBM3E chip to Nvidia

Boasting the industry’s fastest data processing speed, the chip is suitable for various AI applications

Aug 21, 2023 (GMT+09:00)


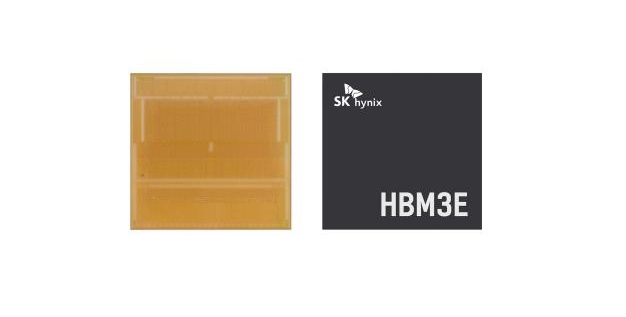

SK Hynix Inc., the world’s second-largest memory chipmaker after Samsung Electronics Co., said on Monday it has developed HBM3E, the industry’s best-performing DRAM chip for artificial intelligence applications, and has provided samples to its client Nvidia Corp. for performance evaluation.

HBM3E, the extended version of HBM3 (high bandwidth memory 3), is the fifth generation of HBM, succeeding HBM, HBM2, HBM2E and HBM3.

HBM is high-value, high-performance memory that vertically interconnects multiple DRAM chips, dramatically increasing data processing speed compared with earlier DRAM products.

SK Hynix said it plans to mass-produce the latest DRAM chip in the first half of next year to solidify its “unrivaled leadership in the AI memory market.”

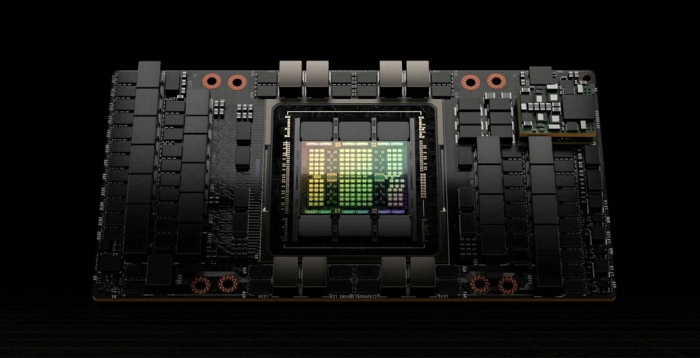

Industry sources said Nvidia will likely use SK Hynix's HBM3E in its next-generation AI accelerator GH200 due later next year.

The South Korean chipmaker said the latest product not only meets the industry’s highest standards of speed – the key specification for AI memory products – but also offers better performance than rival products in terms of capacity, heat dissipation and user-friendliness.

The HBM3E chip can process data up to 1.15 terabytes (TBs) a second, equivalent to processing more than 230 full-HD movies of 5 gigabytes (GBs) in a single second.
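The movie comparison can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function name `movies_per_second` is hypothetical, and it assumes decimal units (1 TB = 1,000 GB), which the article does not specify.

```python
from fractions import Fraction

# Assumption: decimal units, 1 TB = 1,000 GB (not stated in the article).
GB_PER_TB = 1000


def movies_per_second(bandwidth_tb_per_s: str, movie_size_gb: int) -> Fraction:
    """Return how many movies of the given size fit into one second of bandwidth."""
    # Fraction parses the decimal string exactly, avoiding float rounding.
    bandwidth_gb_per_s = Fraction(bandwidth_tb_per_s) * GB_PER_TB
    return bandwidth_gb_per_s / movie_size_gb


# Figures quoted in the article: 1.15 TB per second, 5 GB per full-HD movie.
print(movies_per_second("1.15", 5))  # 230
```

Under binary units (1 TB = 1,024 GB) the same calculation gives a slightly higher figure of about 235 movies a second.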

SK Hynix said the product comes with a 10% improvement in heat dissipation by adopting technology called advanced mass reflow molded underfill (MR-MUF).

The latest chip is also backward compatible, so it can be adopted in systems designed for HBM3 chips without changes to their design or structure.

“We have a long history of working with SK Hynix on high bandwidth memory for leading-edge accelerated computing solutions,” said Ian Buck, vice president of Hyperscale and HPC Computing at Nvidia. “We look forward to continuing our collaboration with HBM3E to deliver the next generation of AI computing.”

SK HYNIX COMPETES WITH SAMSUNG FOR HBM3 CHIPS

The HBM series of DRAM is in growing demand as the chips power generative AI devices that operate on high-performance computing systems.

Such chips are used in high-performance data centers as well as machine learning platforms, where they raise AI and supercomputing performance.

“By increasing the supply share of the high-value HBM products, SK Hynix will also seek a fast business turnaround,” said Ryu Sung-soo, head of DRAM Product Planning at SK Hynix.

SK Hynix became the first memory vendor to mass-produce HBM3 in June 2022.

The company said at the time it would supply the HBM3 DRAM to US graphics chipmaker Nvidia Corp. for its H100 graphics processing unit.

Earlier this month, Samsung, the world’s top memory maker, said it is providing its HBM3 chip samples and chip packaging service to Nvidia for the US company’s graphics processing units.

Samsung is said to be unveiling its fifth-generation HBM3P, with its product name Snowbolt, by the end of this year, followed by its sixth-generation HBM product next year.

According to market research firm TrendForce, the global HBM market is forecast to grow to $8.9 billion by 2027 from $3.9 billion this year. HBM chips are at least five times more expensive than commoditized DRAM chips.
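The forecast implies a compound annual growth rate that can be worked out directly. The sketch below is a rough illustration, not a TrendForce figure: the helper `cagr` is hypothetical, and it assumes “this year” means 2023, giving four years of compounding to 2027.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the article, in billions of US dollars.
# Assumption: the starting year is 2023, so 2027 is four years out.
implied_rate = cagr(3.9, 8.9, 2027 - 2023)
print(f"Implied annual growth: {implied_rate:.1%}")
```

Under these assumptions the implied growth comes out at roughly 23% a year.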

(Updated with a source comment on the possibility of Nvidia using the HBM3E chip in its next-generation AI accelerator and HBM market growth forecasts)

Write to Jeong-Soo Hwang at hjs@hankyung.com

In-Soo Nam edited this article.
