SK Hynix, TSMC tie up to stay ahead of Samsung for HBM supremacy

The collaboration between the two chipmakers comes as global chipmakers race to secure their places amid the AI boom

By Jeong-Soo Hwang, Apr 19, 2024 (GMT+09:00)

South Korea’s SK Hynix Inc. said on Friday it is partnering with Taiwan Semiconductor Manufacturing Co. (TSMC) to jointly develop next-generation chips for artificial intelligence as the two chipmakers push to strengthen their positions in the fast-growing AI chip market.

Analysts said the collaboration is also aimed at solidifying the two companies' leadership in the high-bandwidth memory (HBM) segment against fast followers, including Samsung Electronics Co.

Although Samsung is the world’s top memory chipmaker, it lags crosstown rival SK Hynix, which has been leading the pack in the HBM DRAM segment for years.

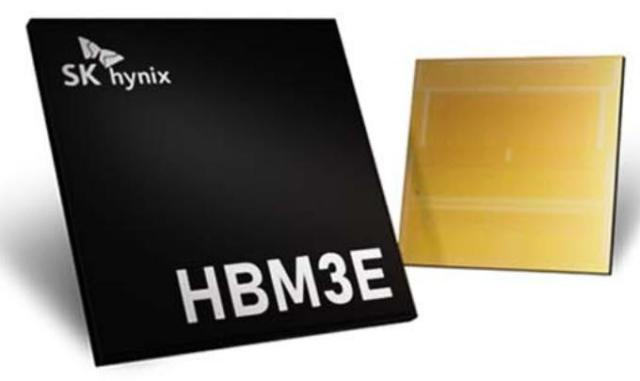

SK Hynix, the world's second-largest memory chipmaker, said in a statement it has signed a memorandum of understanding with TSMC, the world's largest foundry, or contract chip manufacturer, to collaborate on producing next-generation HBM chips.

SK Hynix dominates the production of HBM, which is critical for generative AI computing, while the Taiwanese foundry's advanced packaging technology helps HBM chips and graphics processing units (GPUs) work together efficiently.

Through the initiative, SK Hynix said it plans to develop HBM4, the sixth generation of the HBM family, with mass production slated for 2026.

"We expect a strong partnership with TSMC to help accelerate our efforts for open collaboration with our customers and develop the industry's best-performing HBM4," said Justin Kim, president and head of AI Infra at SK Hynix. "With this cooperation in place, we will strengthen our market leadership as the total AI memory provider further by beefing up competitiveness in the space of the custom memory platform."

The announcement comes as global chipmakers race to take advantage of the AI boom, which is driving demand for logic semiconductors such as processors. SK Hynix and TSMC are key suppliers to Nvidia Corp., the leader in the AI chip market.

INITIAL FOCUS ON BASE DIE

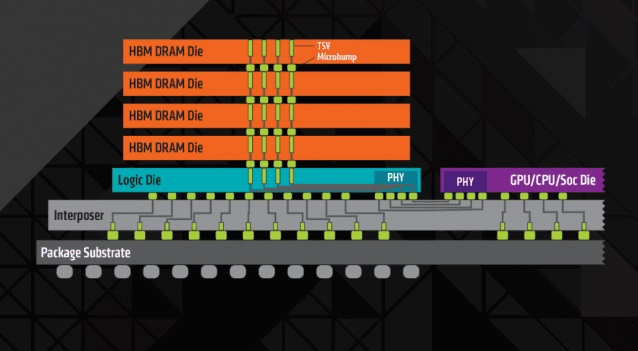

SK Hynix said the two companies will initially focus on improving the performance of the base die at the very bottom of the HBM package.

HBM is made by stacking multiple core DRAM dies on top of a base die and connecting them vertically using a technology called through-silicon via (TSV). The base die, in turn, is connected to the GPU, which controls the HBM.

SK Hynix said it has used its own proprietary technology to make base dies for products up to HBM3E, the fifth-generation HBM, but plans to adopt TSMC's advanced logic process for the HBM4 base die so that additional functionality can be packed into a limited space.

This will help SK Hynix produce customized HBM that meets a wide range of customer demands for performance and power efficiency, the company said.

The two companies will also collaborate to optimize the integration of SK Hynix's HBM with TSMC's 2.5D packaging technology, called CoWoS (Chip on Wafer on Substrate), and will work together to respond to their common customers' HBM-related requests.

“Looking ahead to the next-generation HBM4, we’re confident that we will continue to work closely in delivering the best-integrated solutions to unlock new AI innovations for our common customers,” said Kevin Zhang, senior vice president of TSMC’s Business Development and Overseas Operations Office.

SAMSUNG TURNS UP THE HEAT

A laggard in the sought-after HBM segment, Samsung has vowed to spend heavily to develop next-generation AI chips.

Last month, Kyung Kye-hyun, head of Samsung's semiconductor business, said the company is developing a next-generation AI chip, Mach-1, and aims to unveil a prototype by year-end.

Samsung said in February it had developed HBM3E 12H, the industry's first 12-stack HBM3E DRAM and the highest-capacity HBM product to date. The company said it will begin mass production of the chip in the first half of this year.

HBM has become an essential part of the AI boom because it offers much faster data transfer than conventional memory chips.

Nvidia said last month it is qualifying Samsung's HBM chips for use in its products.

Currently, SK Hynix, Samsung and Micron Technology Inc. can supply HBM chips that pair with the powerful GPUs used for AI computing, such as Nvidia's H100.

Market tracker TrendForce estimates SK Hynix is likely to secure a 52.5% share of the global HBM market this year, followed by Samsung at 42.4% and Micron at 5.1%.

Earlier this month, SK Hynix said it would spend $3.87 billion to build an advanced AI chip packaging plant and research and development facility in Indiana. The facility would be the first of its kind in the US and the company's first overseas HBM chip plant.

Write to Jeong-Soo Hwang at hjs@hankyung.com

In-Soo Nam edited this article.