SK Hynix chief says no delay in 12-layer HBM3E supply as demand soars

TrendForce researcher Avril Wu expects the HBM market to grow 156% on-year to $46.7 billion in 2025

Oct 23, 2024 (GMT+09:00)

Kwak Noh-jung, chief executive of SK Hynix Inc., the world’s second-largest memory chipmaker, said there will be no delay in the company’s planned mass production and supply of its most advanced AI memory chip, the 12-layer HBM3E, to clients including Nvidia Corp.

“There’s no change in our schedule for the mass production of the 12-layer HBM3E by the end of the year. Everything’s going well in terms of shipment and supply timing,” he told reporters Tuesday on the sidelines of a Semiconductor Day event in Seoul.

His comments come as a senior researcher at TrendForce, the Taiwan-based semiconductor research firm, expects the market for HBM, or high-bandwidth memory, to continue to post strong growth next year, driven by soaring demand from Nvidia and other AI chipmakers.

ROBUST HBM CHIP DEMAND GROWTH

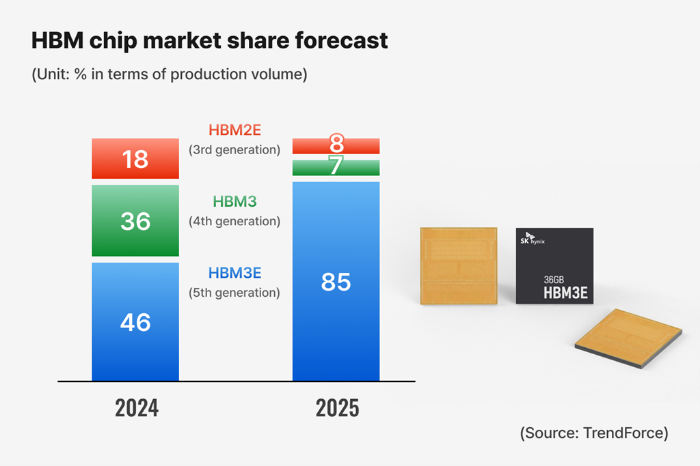

During the TrendForce Roadshow Korea held in Seoul on Tuesday, Avril Wu, senior vice president of research operations at TrendForce, said she expects the global HBM market to grow 156% next year, reaching $46.7 billion, from $18.2 billion this year. Its share of the overall DRAM market is forecast to rise from 20% this year to 34% in 2025.

From the perspective of major AI solution providers, there will be a significant shift in HBM specification requirements toward HBM3E, with an increase in 12-layer stack products anticipated, according to TrendForce. This shift, it added, is expected to drive up HBM capacity per chip.

Among HBM products, Wu said the share of HBM3E, the fifth-generation HBM chip, is forecast to increase to 85% in 2025 from 46% this year, mainly driven by Nvidia's Blackwell GPU.

She said Nvidia will continue to dominate the AI market next year, intensifying competition among major memory chipmakers such as Samsung Electronics Co., SK Hynix and Micron Technology Inc. to secure the US AI chip designer as a customer.

While companies like Advanced Micro Devices Inc. (AMD) ramp up their AI chip sales, Nvidia will consume 73% of the total HBM output in 2025, up from 58% this year, Wu said.

Google's share of HBM consumption is expected to drop to 11% from 18%, and AMD's to 7% from 8%, she said.

The TrendForce researcher predicted that samples of the sixth-generation HBM4 will be released later next year, with official adoption by companies like Nvidia expected in 2026.

SK HYNIX TIES UP WITH TSMC

Among memory chipmakers, SK Hynix is the biggest beneficiary of the explosive increase in AI adoption, as it dominates the production of HBM, which is critical to generative AI computing, and is the top supplier of HBM chips to Nvidia.

SK Hynix controls about 52.5% of the overall HBM market. Its crosstown rival Samsung, the world’s top memory chipmaker, has a 42.4% market share.

In May, SK Hynix CEO Kwak said the company's HBM chips were sold out for this year and its capacity was almost fully booked for 2025.

To hold its lead, SK Hynix teamed up with Taiwan Semiconductor Manufacturing Co. (TSMC), the world’s top contract chipmaker, in April to develop the sixth-generation HBM chip, HBM4.

SAMSUNG STRUGGLES

Currently, Samsung supplies fourth-generation 8-layer HBM3 chips to clients such as Nvidia, Google, AMD and Amazon Web Services Inc. and HBM2E chips to Chinese companies.

Samsung is still striving to gain Nvidia’s approval for its HBM3E chips.

As Samsung struggles to compete with its rivals in the HBM segment, Vice Chairman Jun Young-hyun, the head of Samsung’s DS division, which oversees its semiconductor business, has vowed to drastically cut its chip executive jobs and restructure semiconductor-related operations.

Samsung, which set up a dedicated HBM chip development team, has also forged a tie-up with TSMC for HBM4 chips.

Write to Eui-Myung Park at uimyung@hankyung.com

In-Soo Nam edited this article.
