Samsung establishes HBM team to up AI chip production yields

“HBM leadership is coming to us,” Kyung Kye-hyun, Samsung's semiconductor business chief says

Mar 29, 2024 (GMT+09:00)

Samsung Electronics Co., the world’s No. 1 memory chipmaker, recently set up a high bandwidth memory (HBM) team within its memory chip division to raise production yields as it develops HBM4, its sixth-generation AI memory, and the Mach-1 AI accelerator.

The new team is in charge of the development and sales of DRAM and NAND flash memory, according to industry sources on March 29.

Hwang Sang-joon, corporate executive vice president and head of DRAM Product and Technology at Samsung, will lead the new team. The company has not yet decided how many employees will work on it.

It is Samsung’s second HBM-dedicated team, following the HBM task force it launched in January this year with 100 staff from its device solutions division.

Samsung is ramping up efforts to overtake its local rival SK Hynix Inc., the dominant player in the advanced HBM segment. In 2019, Samsung disbanded its then-HBM team after concluding that the HBM market would not grow significantly, a painful mistake it now regrets.

TWO-TRACK STRATEGY

To grab the lead in the AI chip market, Samsung will pursue a “two-track” strategy of simultaneously developing two types of cutting-edge chips: HBM and Mach-1.

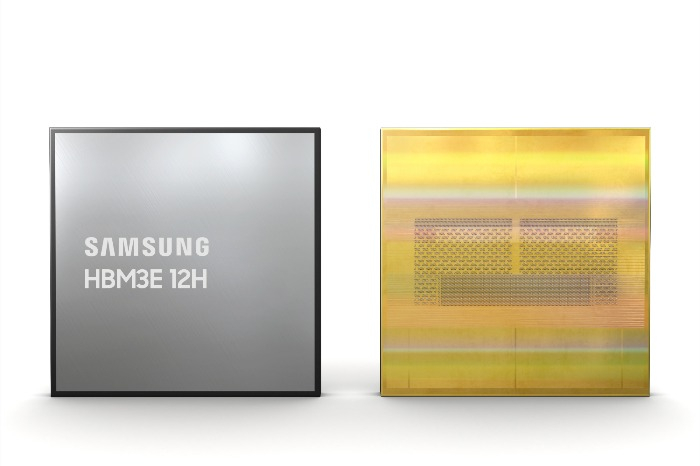

It plans to mass-produce HBM3E in the second half of this year and produce its follow-up model HBM4 in 2025.

Currently, HBM3E is the best-performing DRAM for AI applications and the fifth generation of HBM, succeeding HBM, HBM2, HBM2E and HBM3.

“Customers who want to develop customized HBM4 will work with us,” Kyung Kye-hyun, head of Samsung's semiconductor business, said in a note posted on a social media platform on Friday.

“HBM leadership is coming to us thanks to the dedicated team’s efforts,” he added.

At Memcon 2024, a gathering of global chipmakers held in San Jose, California on Tuesday, Samsung's Hwang said he expects the company to raise its HBM chip production volume this year to 2.9 times last year’s output.

HBM is a high-performance memory chip that stacks multiple DRAM dies vertically. It is an essential component of AI chips, which must process large volumes of data.

According to Yole Group, a French IT research firm, the HBM market is forecast to expand to $19.9 billion in 2025 and $37.7 billion in 2029, compared to an estimated $14.1 billion in 2024.
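
For readers who want to see what those forecast figures imply, here is a minimal Python sketch that converts them into annual growth rates. Only the three dollar figures come from the forecast quoted above; the names `hbm_market_usd_bn`, `cagr` and `implied_growth` are illustrative, and using a compound annual growth rate is our assumption, not a method attributed to Yole Group.

```python
# Market sizes quoted in the article (Yole Group), in billions of US dollars.
hbm_market_usd_bn = {2024: 14.1, 2025: 19.9, 2029: 37.7}


def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


def implied_growth(market, start_year, end_year):
    """Annualized growth implied by the figures for two given years."""
    return cagr(market[start_year], market[end_year], end_year - start_year)


if __name__ == "__main__":
    for start, end in [(2024, 2025), (2025, 2029), (2024, 2029)]:
        rate = implied_growth(hbm_market_usd_bn, start, end)
        print(f"{start}-{end}: {rate:.1%} per year")
```

On these figures, the market would grow by roughly 41% from 2024 to 2025, then by about 17% a year through 2029, or close to 22% a year over the full five-year span.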

Last week, Kyung said at the company's annual general meeting that the Mach-1 AI chip is under development and that Samsung plans to produce a prototype by year-end.

Mach-1 is a system-on-chip (SoC) designed to reduce the bottleneck between the graphics processing unit (GPU) and HBM chips.

Samsung is also preparing to develop Mach-2, the successor to Mach-1, its AI accelerator dedicated to inference.

“We need to accelerate the development of Mach-2, for which clients are showing strong interest,” Kyung said in the note on Friday.

Write to Jeong-Soo Hwang at hjs@hankyung.com

Yeonhee Kim edited this article.
