Korean chipmakers

Samsung, SK pin hopes on HBM sales with Nvidia's new AI chip

Nvidia will launch the H200 GPU, an advanced AI chipset that needs high-quality HBM, in the second quarter of 2024

Nov 14, 2023 (GMT+09:00)


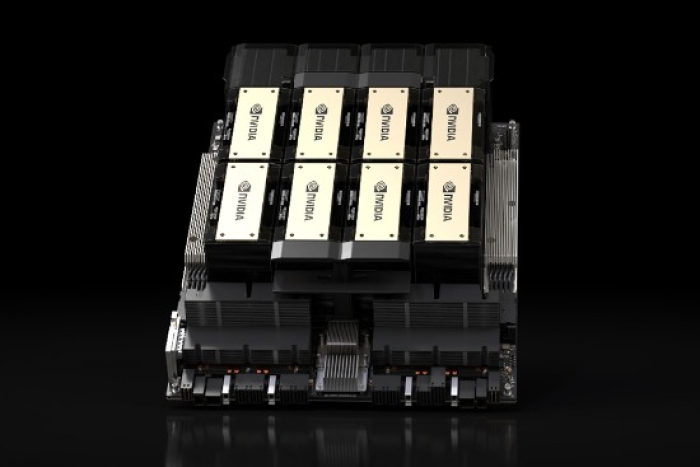

South Korean memory chipmakers such as Samsung Electronics Co. and SK Hynix Inc. have high hopes for fast growth in their high-bandwidth memory (HBM) sales after US fabless chip designer Nvidia Corp. on Monday introduced its new artificial intelligence chipset, the H200 Tensor Core GPU.

The new AI chipset is the first graphics processing unit (GPU) to offer 141 gigabytes (GB) of HBM3e memory at 4.8 terabytes (TB) per second, nearly double the capacity and 2.4 times the bandwidth of its predecessor, the A100, Nvidia stated on Nov. 13.

The H200's advanced memory can handle massive amounts of data for generative AI and high-performance computing workloads, Nvidia added.

The H200 will be available from global system manufacturers and cloud service providers in the second quarter of next year, Nvidia said. The firm didn’t disclose the price range of the new GPU.

Global tech giants such as Amazon Web Services Inc., Microsoft Corp. and Google LLC will use the H200 to advance their AI services, market insiders said.

The launch of the H200 is forecast to fuel competition between Nvidia and its crosstown rival Advanced Micro Devices Inc. (AMD), which unveiled its Instinct MI300X accelerators in June of this year.

At the time, AMD claimed that MI300X offers 2.4 times the memory density of the Nvidia H100 and more than 1.6 times the bandwidth. The Instinct MI300X chip is scheduled to be launched on Dec. 6.

Samsung and SK Hynix, the world’s two largest memory chipmakers, are preparing to increase their HBM production by up to 2.5 times amid intensifying competition for AI chips.

Samsung Electronics has also completed negotiations with its major clients on HBM supplies for next year, Kim Jae-joon, Samsung's vice president of the memory chip business, said in October.

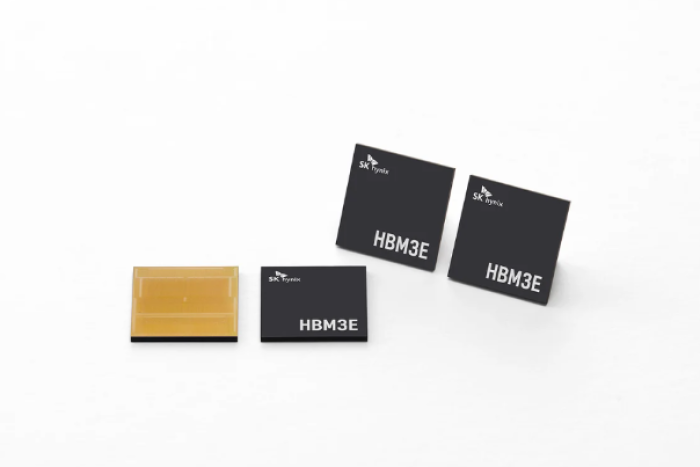

During SK Hynix's third-quarter earnings conference call last month, Park Myoung-soo, the firm's vice president and head of DRAM marketing, said that the company's planned 2024 sales of HBM3 and upgraded HBM3E chips to its clients have been fully booked.

Samsung is supplying HBM3 and turnkey packaging services to AMD. The world's top memory chipmaker also passed HBM3 quality verification for Nvidia's A100 and H100 in late August, according to industry sources, but it has not yet officially confirmed whether it will supply HBM3 to Nvidia.

SK Hynix, the world’s second-largest memory chipmaker after Samsung, provided samples of HBM3E, the extended version of HBM3, to Nvidia in August. SK Hynix is supplying HBM3 to AMD.

Write to Jeong-Soo Hwang and Jin-seok Choi at hjs@hankyung.com

Jihyun Kim edited this article.
