Korean chipmakers

Samsung set to triple HBM output in 2024 to lead AI chip era

The forecast is higher than Samsung’s January projection of doubling its HBM chip volume this year

Mar 27, 2024 (GMT+09:00)


SILICON VALLEY – Samsung Electronics Co., the world’s largest memory chipmaker, will likely triple its high bandwidth memory (HBM) chip production volume this year from last year’s output as it aims to take the lead in the artificial intelligence chip segment.

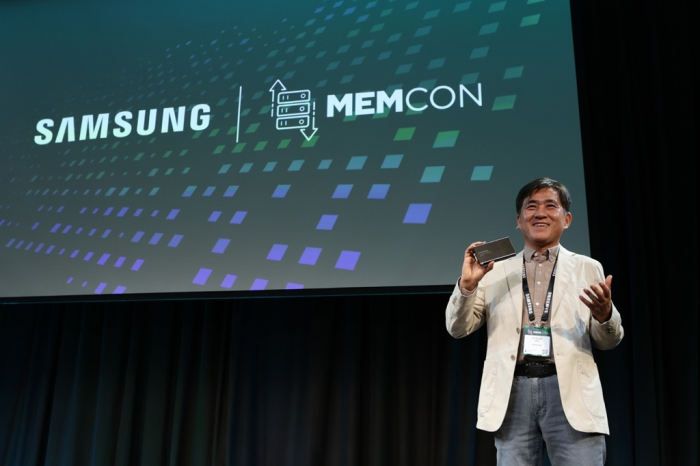

At Memcon 2024, a gathering of global chipmakers, held in San Jose, California on Tuesday, Hwang Sang-joong, corporate executive vice president and head of DRAM Product and Technology at Samsung, said he expects the company to increase its HBM chip production volume by 2.9 times this year compared to last year’s output.

The forecast is higher than Samsung’s projection unveiled at CES 2024 early this year that the chipmaker will likely produce 2.5 times more HBM chips in 2024.

“Following the third-generation HBM2E and fourth-generation HBM3, which are already in mass production, we plan to produce the 12-layer fifth-generation HBM and 32 gigabit-based 128 GB DDR5 products in large quantities in the first half of the year,” Hwang said at Memcon 2024.

“With these products, we expect to enhance our presence in high-performance, high-capacity memory in the AI era.”

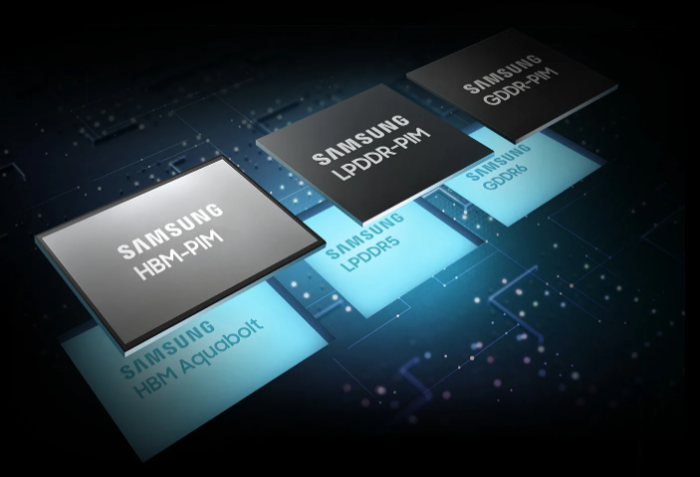

At the conference, Samsung unveiled its HBM roadmap, which envisions 13.8 times more HBM shipments in 2026 than the 2023 output. Annual HBM output volumes will rise further to 23.1 times the 2023 level by 2028, it said.

In HBM4, the sixth-generation HBM chip code-named “Snowbolt,” Samsung plans to apply the buffer die, a control device, to the bottom layer of stacked memory for efficiency.

At the conference, Samsung also gave participants a demonstration of its latest HBM3E 12H chip – the industry’s first 12-stack HBM3E DRAM, marking a breakthrough with the highest capacity ever achieved in HBM technology.

Samsung is currently sampling its HBM3E 12H chips to customers and plans to start mass production in the first half.

The conference participants included SK Hynix Inc., Microsoft, Meta Platforms, Nvidia and AMD.

CXL TECHNOLOGY AT MEMCON 2024

Samsung also unveiled at Memcon 2024 the expansion of its Compute Express Link (CXL) memory module portfolio, showcasing its technology in high-performance and high-capacity solutions for AI applications.

In a keynote address, Choi Jin-hyeok, corporate executive vice president of Samsung’s Device Solutions Research America, said Samsung is committed to collaborating with its partners to unlock the full potential of the AI era.

“AI innovation cannot continue without memory technology innovation. As the memory market leader, Samsung is proud to continue advancing innovation, from the industry’s most advanced CMM-B technology to powerful memory solutions like HBM3E for high-performance computing and demanding AI applications.”

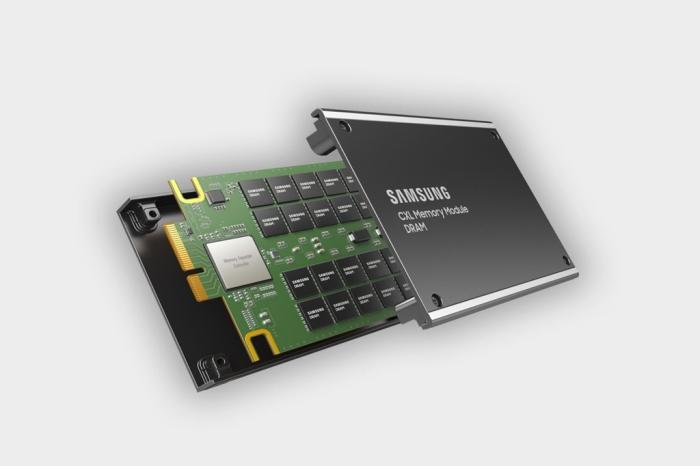

Highlighting growing momentum in the CXL ecosystem, Samsung introduced its CXL Memory Module – Box (CMM-B), a cutting-edge CXL DRAM memory product.

The chipmaker also showcased CXL Memory Module Hybrid for Tiered Memory (CMM-H TM) and CXL Memory Module – DRAM (CMM-D) technology.

CXL is a next-generation interface that adds efficiency to accelerators, DRAM and storage devices used with central processing units (CPUs) in high-performance server systems.

LAGGARD IN HBM SEGMENT

A laggard in the HBM chip segment, Samsung has heavily invested in HBM to rival SK Hynix and other memory players.

HBM has become essential to the AI boom as it provides faster processing speed than traditional memory chips.

Last week, Kyung Kye-hyun, head of Samsung's semiconductor business, said the company is developing Mach-1, its next-generation AI chip, with which it aims to upend its crosstown rival SK Hynix, the dominant player in the advanced HBM segment.

The company said it has already agreed to supply Mach-1 chips to Naver Corp. by the end of this year in a deal worth up to 1 trillion won ($752 million).

With the contract, Naver hopes to significantly reduce its AI chip reliance on Nvidia, the world’s top AI chip designer.

Write to Jin-Suk Choi at iskra@hankyung.com

In-Soo Nam edited this article.
