Samsung accelerates foray into fastest AI chip market

The chipmaker is set to mass produce 16 GB and 24 GB HBM3 chips, the industry's fastest and slimmest models

Jun 26, 2023 (GMT+09:00)

South Korea’s Samsung Electronics Co. is speeding up its efforts to penetrate deeper into the high bandwidth memory 3 (HBM3) market, an area it has neglected relative to other high-performance chips because HBM accounts for only a tiny share of the overall memory chip market.

But the advent of generative AI such as ChatGPT is driving the world’s top memory chipmaker to ramp up production of HBM chips, which boast faster data-processing speeds and lower energy consumption than conventional DRAMs.

Samsung recently shipped customers samples of 16-gigabyte (GB) HBM3 products with the lowest energy consumption of their kind, according to industry sources on Monday.

Currently, 16 GB is the maximum memory capacity among existing HBM3 products. The chip processes data at 6.4 gigabits per second (Gbps) per pin, the industry’s fastest.

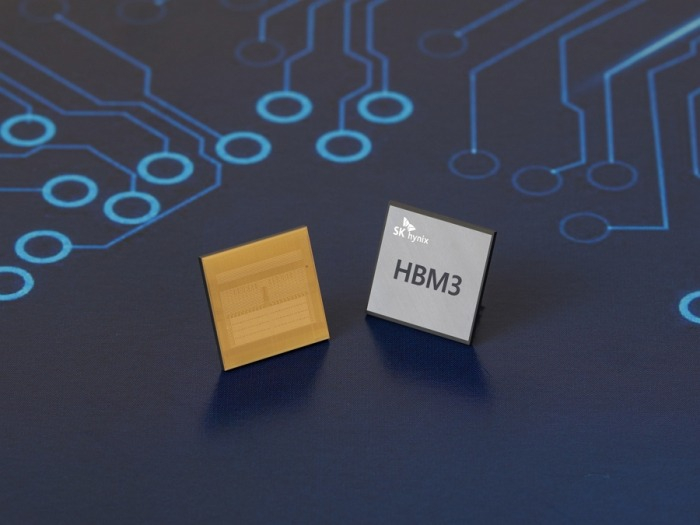

Samsung also delivered samples of a 12-layer, 24 GB HBM3, a fourth-generation HBM chip and the industry’s slimmest of its kind. Its smaller domestic rival SK Hynix Inc. was the first in the world to unveil a chip of the same type, in April this year.

Samsung is now ready to mass produce both types of HBM3, the sources said. In the second half, it will launch an advanced model of HBM3 memory with higher performance and capacity.

An HBM is a product made by vertically stacking DRAM chips. It is mainly used in graphics processing units (GPUs) that power generative AI platforms such as ChatGPT.

Samsung is understood to have begun the shipment of HBM3 products to its major customers.

AMD Inc.’s recently announced MI300 accelerated processing units are embedded with Samsung’s HBM3 memory. AMD is a fabless US semiconductor company. MI300 chips are used to power supercomputers.

The Aurora supercomputer, developed jointly by Intel Corp. and the Argonne National Laboratory, is also equipped with Samsung chips, according to the sources.

IN EARLY STAGES OF GROWTH

HBM had not been high on Samsung’s list of priorities. The company instead focused on mobile chips and high-performance computing technologies designed to improve data-processing capability and the performance of complex calculations.

The HBM market is still in its early stages of growth, accounting for less than 1% of the DRAM market.

But Samsung’s aggressive foray into the sector is expected to shake up the HBM market, giving a leg up to the sluggish DRAM market, industry watchers said.

SK Hynix controls half of the HBM market worldwide, trailed by Samsung with a 40% share and Micron Technology Inc. with a 10% share, according to a Taiwan-based research firm.

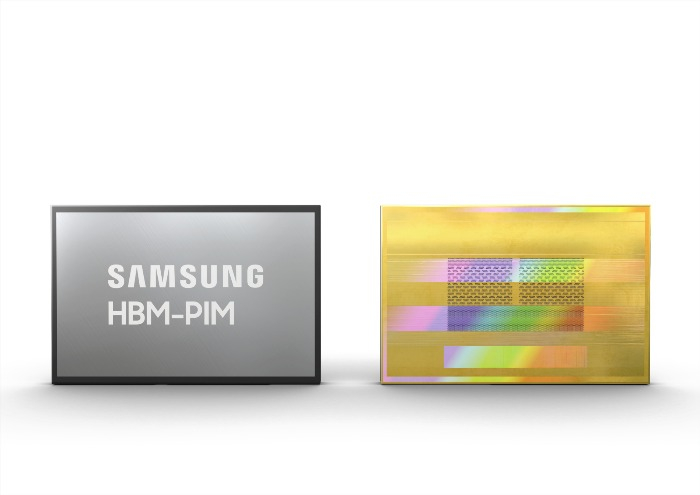

In 2021, Samsung developed HBM-PIM (processing-in-memory) integrated with an AI accelerator. According to Samsung Electronics, it improves the generative performance of an AI application by 3.4 times compared with an HBM-powered GPU accelerator.

It also unveiled CXL DRAMs, which offer greater data-processing capacity than conventional DRAMs and can thus prevent memory bottlenecks in AI supercomputers, as well as slowdowns in text generation and data movement.

Write to Jeong-Soo Hwang at hjs@hankyung.com

Yeonhee Kim edited this article.
