LG takes on Big Tech AI in reasoning with Exaone Deep

The South Korean tech giant’s AI inferencing model beat global AI peers like OpenAI in various tests

Mar 18, 2025 (GMT+09:00)

LG AI Research, the artificial intelligence (AI) research and development unit of South Korea's LG Group, has unveiled Exaone Deep, an open-source AI model designed to rival industry leaders in reasoning.

The company on Tuesday announced that its AI reasoning model outperformed global rivals such as OpenAI’s GPT models, Google DeepMind’s Gemini and China's DeepSeek in scientific comprehension and mathematical logic.

The Korean AI model, which builds on LG’s large language model Exaone 3.5, demonstrates outstanding performance in complex problem-solving, with a strong focus on long-context understanding and instruction-following accuracy, LG said.

Only a handful of companies with foundation models – OpenAI, Google, DeepSeek and Alibaba – have developed AI inference models.

Machine learning (ML) is the process of using training data and algorithms under supervision to enable AI to imitate how humans learn.

AI inference is the ability to apply what the AI model learned through ML to decide, predict or draw conclusions from information never seen before.

LG pins high hopes on Exaone Deep, Korea's first AI inferencing model that can go head to head against global big tech rivals, the company said.

With the new AI inferencing model, the Korean tech giant is gearing up for the era of Agentic AI, where AI independently formulates hypotheses, verifies them and autonomously makes decisions without human instructions.

PERFORMANCE PROWESS

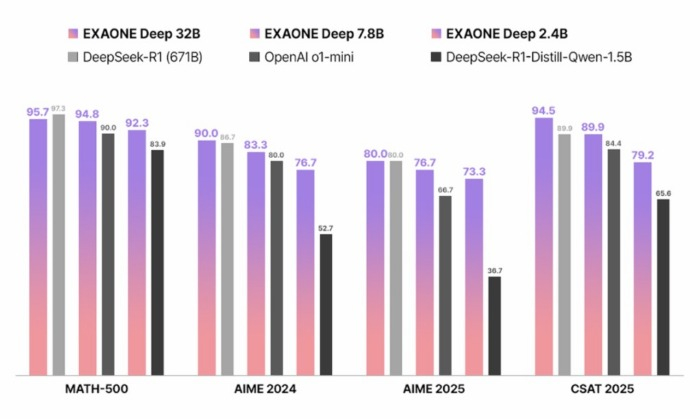

Exaone Deep 32B delivered performance equivalent to that of the competing DeepSeek-R1, which has 671 billion parameters, at about 5% of its size.
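
As a rough check on that size comparison, and assuming the "32B" in the model name means roughly 32 billion parameters (the article does not state the exact count), the ratio to DeepSeek-R1's 671 billion parameters works out to just under 5%:

$$\frac{32 \times 10^{9}}{671 \times 10^{9}} \approx 0.048 = 4.8\%$$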

It scored 94.5 points, the highest, on the math section of Korea's college entrance exam, the College Scholastic Ability Test (CSAT), and 90.0 points on the American Invitational Mathematics Examination (AIME) 2024.

On AIME 2025, it scored on par with the DeepSeek-R1 model.

The Exaone Deep 7.8B and 2.4B models also ranked first in all major benchmarks within the lightweight model and on-device model categories, respectively.

All three models also scored well in tests evaluating doctorate-level problem-solving abilities in physics, chemistry and biology. In particular, the Exaone Deep 7.8B and 2.4B models achieved the highest performance in the lightweight and on-device categories, outscoring global peers such as OpenAI’s o1-mini.

Exaone Deep also proved its strength in coding and problem-solving, underscoring its potential for applications in software development, automation and other technical fields, which require high levels of computational accuracy.

It also excelled in general language understanding, securing the highest Massive Multitask Language Understanding (MMLU) score among Korean models.

Exaone Deep's evaluation results are posted on Hugging Face, a global open-source AI platform.

RACE TO DOMINATE NEXT-GEN AI INFERENCE

The unveiling of Exaone Deep marks LG’s deepening commitment to AI-driven innovation, as competition intensifies in the global race for highly capable, domain-specialized models.

Cost-effective models in particular have drawn attention since China’s DeepSeek rose to prominence for its reasoning abilities.

LG AI Research has trained Exaone Deep with quality datasets while scaling down its parameters.

It uses domain-specific data from LG's affiliates and only select public data to improve reasoning accuracy, the company said.

Last summer, LG AI Research, an institute of the group’s holding company LG Corp., unveiled Exaone 3.0, an improved version of its predecessor showcased in July 2023.

The group revealed the first version of Exaone in December 2021.

Write to Eui-Myung Park at uimyung@hankyung.com

Sookyung Seo edited this article.
