South Korean firms roll out AI chatbots that tug at the heartstrings

Conglomerates and startups alike tap into the market for chatbots that can mimic emotional connections with users

Jul 19, 2022 (GMT+09:00)

If you use artificial intelligence-powered chatbot services in South Korea, you may soon receive messages like this: “Would you like to go for a walk?” And the chatbot will send them unprompted, without the user starting the conversation.

Korean conglomerates and startups are bullish on developing chatbots that can not only provide standardized answers but also tailor their responses to the context and to what they have learned about a user's personality.

CHECKING IN

SK Telecom Co. tweaked its digital secretary app A. (pronounced A dot) earlier this month to add a function that lets it speak to the user without being asked.

While the app is on, the chatbot may ask whether you have had lunch yet or whether you are enjoying the summer night, depending on a variety of factors including the weather and the time of day.

Scatter Lab, a text-based sentiment analysis service provider, recently incorporated a similar function into its chatbot Lee Luda.

The objective is to give the impression of engaging with a friend, much as a friend might share a funny meme with you on Instagram.

The startup plans to add more functions that will help cultivate such an experience between the chatbot and its users.

In January last year, Lee Luda was embroiled in a controversy after the chatbot began sending offensive comments that it had learned from users' messages. Users also subjected the fictional character to sexual remarks.

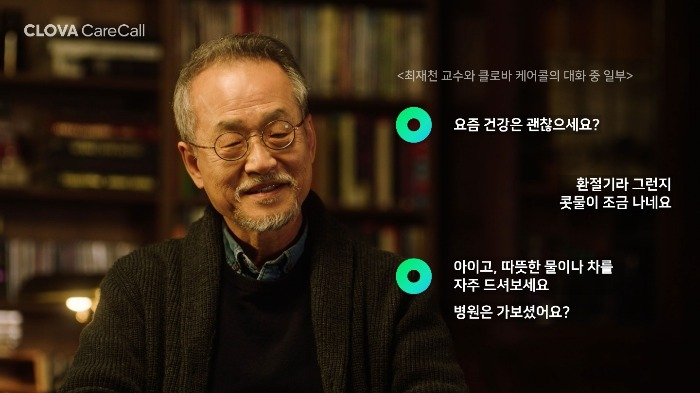

Search engine behemoth Naver Corp. plans to continue advancing its CLOVA CareCall service, launched late last month.

The AI-powered service is aimed at keeping tabs on the health of middle-aged citizens who live by themselves.

The idea is to train the chatbot to extract and memorize important nuggets of information from previous conversations and use them in future ones.

With this function, the chatbot can check up on the user with questions such as “Were you able to visit the clinic that you’ve been meaning to go to?” to remind the person of a doctor’s visit.

Wireless carrier KT Corp., for its part, plans to develop a hyper-scale AI dubbed KT AI 2.0 within the year that also mimics human emotional intelligence.

WIDER ADOPTION

The companies are looking to build emotional intelligence into chatbots in order to expand their use cases.

The industry has concluded that providing factual and standardized answers alone would not be profitable in the long run.

The companies are betting that if users can form emotional bonds with the AI, the chatbots can be used as virtual assistants and consultants.

Once fully advanced, the chatbots could even provide emotional support, playing a role similar to that of pets.

“We are advancing our services to a point where users can open an app and communicate with it because of emotional bonds rather than a functional objective,” an SK Telecom employee said.

“Even though technological advancement solved many issues, building a solid relationship remains a difficult task,” a Scatter Lab employee said. “We are trying to get there with the help of artificial intelligence.”

Experts say the development of AI that can foster emotional connections with humans is much more complicated than that of chatbots that spew standardized answers on the weather forecast or traffic conditions.

The key is to maintain or improve the accuracy of the responses while giving them a more human touch than existing answers have.

Take the case of a user asking about his or her own socioeconomic status.

The chatbot should be programmed to provide comforting words and encouragement if the user turns out to be in the lower-income category, instead of just informing the person of where he or she stands.

Tech companies are looking to use a wider set of data to achieve more human-like responses.

“In order to add more humane aspects to the datasets, we are conducting fusion research that encompasses humanities, psychology, and cognitive science,” a KT employee said.

Write to Han-Gyeol Seon at always@hankyung.com

Jee Abbey Lee edited this article.
