
KAIST says its computation engine outperforms Google's, IBM's

'FuseME raises deep learning models’ processing speed and processes much larger data than TensorFlow and SystemDS can handle'

Jun 20, 2022 (GMT+09:00)


Korea Advanced Institute of Science & Technology (KAIST), a prestigious South Korean university, has unveiled an artificial intelligence computation engine that it said outperforms those of Google and IBM.

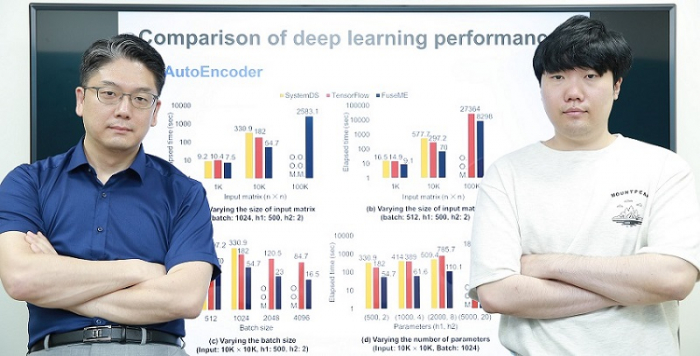

A research team at the KAIST School of Computing led by Professor Kim Min-soo said on Monday it has developed FuseME, a fused matrix computation engine that can significantly improve the performance of machine learning systems, including those used for deep learning.

“The result of comparative evaluation with Google’s TensorFlow and IBM’s SystemDS showed FuseME increased the processing speed of deep learning models by up to 8.8 times and succeeded in processing much larger data than the other two could handle,” said the team.

The team also said FuseME's computation fusion runs up to 238 times faster than that of its competitors and can cut network communication costs to as little as one sixty-fourth.

FuseME is an upgrade of DistME, a fast and elastic matrix computation engine unveiled in 2019 by the team.

“The new technology is expected to have a significant ripple effect in industries as it can drastically enhance the scale and performance of machine learning model processing,” Kim said.

The technology was unveiled on June 16 in Philadelphia at the ACM Special Interest Group on Management of Data (SIGMOD) conference, a prestigious international academic forum in the database field.

TO FUSE MATRIX MULTIPLICATION

To process large-scale matrix computations quickly and scalably, a number of distributed matrix computation systems have been built on top of MapReduce-based frameworks, according to the KAIST team. MapReduce is a programming model for large-scale data processing proposed by Google.
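To give a sense of how MapReduce frames matrix work, here is the textbook one-pass MapReduce matrix multiplication, simulated in plain Python. It is a generic illustration under our own assumptions, not code from the KAIST team or from any particular system, and the function names are hypothetical. The map phase tags every matrix entry with the output cell it contributes to, the shuffle groups pairs by that key (in a real cluster, this is where data crosses the network), and the reduce phase sums the matching products.

```python
from collections import defaultdict


def map_phase(A, B):
    """Emit ((i, j), value) pairs for C = A @ B (A is n x m, B is m x p).
    Each value is tagged with its source matrix and the shared index k."""
    n, m, p = len(A), len(A[0]), len(B[0])
    for i in range(n):
        for k in range(m):
            for j in range(p):          # A[i][k] is needed by every C[i][j]
                yield (i, j), ("A", k, A[i][k])
    for k in range(m):
        for j in range(p):
            for i in range(n):          # B[k][j] is needed by every C[i][j]
                yield (i, j), ("B", k, B[k][j])


def shuffle(pairs):
    """Group values by output cell (the network-heavy step on a cluster)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups, n, p):
    """For each output cell, pair A and B entries by k and sum the products."""
    C = [[0] * p for _ in range(n)]
    for (i, j), values in groups.items():
        a = {k: v for tag, k, v in values if tag == "A"}
        b = {k: v for tag, k, v in values if tag == "B"}
        C[i][j] = sum(a[k] * b[k] for k in a if k in b)
    return C


def mapreduce_matmul(A, B):
    return reduce_phase(shuffle(map_phase(A, B)), len(A), len(B[0]))
```

For example, `mapreduce_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])` returns `[[19, 22], [43, 50]]`. The map phase emits 2*n*m*p pairs, which hints at why shuffle and network traffic grow quickly with matrix size.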

For a given matrix query, these systems generate and execute a query plan in the form of a directed acyclic graph (DAG) of basic matrix operators, the team said.
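A query plan of this kind can be pictured with a toy DAG. The sketch below is a hypothetical, single-machine illustration: the `Node` structure, operator names and example query are assumptions, not any real system's API. It evaluates the graph one basic operator at a time and keeps every intermediate result, which is the behavior the team says becomes a bottleneck at scale.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    op: str              # "input", "matmul", "mul", "add" or "sum"
    inputs: tuple = ()


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def elementwise(f, A, B):
    return [[f(a, b) for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


OPS = {
    "matmul": matmul,
    "mul": lambda A, B: elementwise(lambda a, b: a * b, A, B),
    "add": lambda A, B: elementwise(lambda a, b: a + b, A, B),
    "sum": lambda A: sum(sum(row) for row in A),
}


def execute(root, feeds):
    """Evaluate the DAG operator by operator, materializing every result.
    Results are cached by node name, so shared subexpressions run once."""
    materialized = {}

    def visit(node):
        if node.name in materialized:
            return materialized[node.name]
        if node.op == "input":
            value = feeds[node.name]
        else:
            value = OPS[node.op](*(visit(child) for child in node.inputs))
        materialized[node.name] = value
        return value

    return visit(root), materialized


# Hypothetical query: sum((X @ W) * M)
X, W, M = Node("X", "input"), Node("W", "input"), Node("M", "input")
XW = Node("XW", "matmul", (X, W))
masked = Node("masked", "mul", (XW, M))
total = Node("total", "sum", (masked,))
result, materialized = execute(
    total, {"X": [[1, 2], [3, 4]], "W": [[1, 0], [0, 1]], "M": [[1, 1], [0, 1]]}
)
```

Here `result` is 7, and `materialized` holds six entries: the three inputs plus three intermediates (`XW`, `masked`, `total`), each stored in full before the next operator reads it.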

But as models grow, these systems often fail to process the data or take too long, because large volumes of intermediate data from the matrix computation are stored in memory or sent to other machines over the network before they can be processed further.
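The cost of materializing intermediates can be seen in a small sketch of generic operator fusion, a standard database and compiler technique; this is not FuseME's code. Both hypothetical functions compute sum((X * Y + Z)^2) element-wise. The unfused version writes three full intermediate matrices, while the fused version makes a single pass and keeps each value in a local variable. In a distributed system those intermediates might also be shipped over the network; the sketch only counts cells written in memory.

```python
def unfused(X, Y, Z):
    """Operator-at-a-time: every step writes a full intermediate matrix.
    Returns (result, number of intermediate cells written)."""
    cells_written = 0
    T1 = [[x * y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]
    cells_written += sum(len(r) for r in T1)
    T2 = [[t + z for t, z in zip(rt, rz)] for rt, rz in zip(T1, Z)]
    cells_written += sum(len(r) for r in T2)
    T3 = [[t * t for t in rt] for rt in T2]
    cells_written += sum(len(r) for r in T3)
    return sum(sum(r) for r in T3), cells_written


def fused(X, Y, Z):
    """One pass over the inputs; no intermediate matrix is ever built."""
    total = 0
    for rx, ry, rz in zip(X, Y, Z):
        for x, y, z in zip(rx, ry, rz):
            v = x * y + z        # lives only in a local variable
            total += v * v
    return total, 0
```

With `X = [[1, 2], [3, 4]]`, `Y = [[1, 1], [1, 1]]` and `Z = [[0, 1], [0, 1]]`, `unfused` returns `(44, 12)` and `fused` returns `(44, 0)`: the same answer without the 12 intermediate cells.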

“Existing machine learning systems could not improve performance because they fused only the other operations and left out matrix multiplication, the most complex computation. They also executed the entire DAG query plan as one simple operation,” said a KAIST official.

FuseME, by contrast, fuses all operations, including matrix multiplication.
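The article does not say how FuseME fuses matrix multiplication. Purely as an illustration of the general idea, the sketch below fuses a matrix product with an element-wise ReLU and a final sum, computing sum(max(A @ B, 0)) one output tile at a time so that the full product is never stored. The function name, the choice of ReLU and the `block` tile size are hypothetical.

```python
def fused_matmul_relu_sum(A, B, block=2):
    """Compute sum(max(A @ B, 0)) tile by tile (A is n x m, B is m x p).
    Each tile of the product is computed, passed through ReLU, folded into
    the running total and discarded. Returns (total, largest tile size)."""
    n, m, p = len(A), len(B), len(B[0])
    total = 0
    peak_tile_cells = 0
    for i0 in range(0, n, block):
        for j0 in range(0, p, block):
            rows = range(i0, min(i0 + block, n))
            cols = range(j0, min(j0 + block, p))
            # Only this tile of A @ B exists at any moment.
            tile = [[sum(A[i][k] * B[k][j] for k in range(m)) for j in cols]
                    for i in rows]
            peak_tile_cells = max(peak_tile_cells, len(rows) * len(cols))
            total += sum(max(v, 0) for row in tile for v in row)
    return total, peak_tile_cells
```

For `A = [[1, -2], [3, 4], [0, 1]]` and `B = [[1, 0, -1], [2, 1, 0]]`, the call with `block=2` returns `(18, 4)`: the same total as materializing the 3 x 3 product, while holding at most four product cells at a time.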

The team devised a method that determines which operations to fuse to improve performance and groups them based on cost.

FuseME then chooses the execution strategy that delivers optimal performance, taking into account the properties of each group, network communication speed and input data size.
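The article gives no detail on FuseME's cost model. The sketch below is a hypothetical, much-simplified stand-in that shows how input size and network speed can drive grouping decisions: a dynamic program over a linear chain of operators that fuses neighbors as long as the group fits a memory limit, and otherwise pays to ship each group's output over the network at a given bandwidth. The operator tuples, units and parameters are all assumptions.

```python
from functools import lru_cache


def plan_groups(ops, bandwidth, memory_limit):
    """ops: list of (name, out_bytes, mem_bytes, compute_seconds) for a linear
    chain. Returns (cost_seconds, groups) minimizing compute time plus the
    time to send each non-final group's output over the network. Returns
    (inf, ()) if some single operator exceeds memory_limit."""
    n = len(ops)

    @lru_cache(maxsize=None)
    def best(start):
        if start == n:
            return 0.0, ()
        result = (float("inf"), ())
        mem, compute = 0, 0.0
        for end in range(start, n):          # candidate group ops[start..end]
            _, out_bytes, mem_bytes, seconds = ops[end]
            mem += mem_bytes
            if mem > memory_limit:
                break                        # fused group no longer fits
            compute += seconds
            transfer = out_bytes / bandwidth if end < n - 1 else 0.0
            rest_cost, rest_groups = best(end + 1)
            cost = compute + transfer + rest_cost
            if cost < result[0]:
                group = tuple(op[0] for op in ops[start:end + 1])
                result = (cost, (group,) + rest_groups)
        return result

    return best(0)


ops = [("matmul", 1000, 200, 2.0), ("mul", 10, 200, 0.5), ("sum", 8, 200, 0.1)]
```

With `bandwidth=100` and `memory_limit=450`, the chain cannot be fused in one piece, and `plan_groups(ops, 100, 450)` splits after `mul` rather than after `matmul` (groups `(("matmul", "mul"), ("sum",))`, cost about 2.7), because `mul`'s output is far cheaper to ship. With `memory_limit=1000`, it fuses all three operators (cost about 2.6).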

Write to Hae-Sung Lee at ihs@hankyung.com

Jongwoo Cheon edited this article.
